Jacob Schmieder

Open to PhD Opportunities

I’m actively seeking a PhD position that blends theoretical physics with machine learning. As a Senior Data Scientist and AI Advisor, I build research-grade infrastructure, mentor interdisciplinary teams, and turn complex datasets into actionable insight. I’m ready to bring that expertise to a doctoral group tackling ambitious, data-driven physics questions.

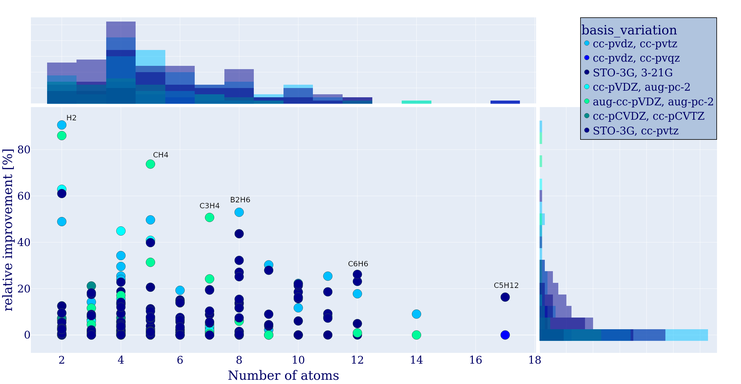

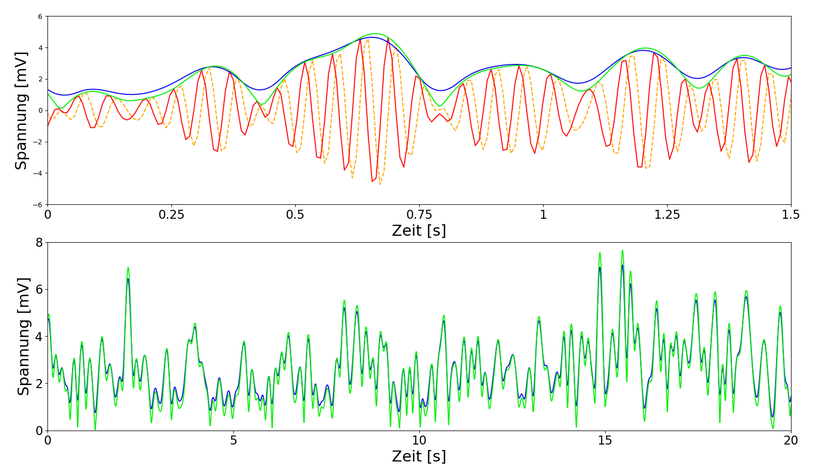

- Focus areas: Machine learning for physics, AI infrastructure, scientific computing

- What I bring: Proven track record of shipping reproducible ML experiments, enabling HPC pipelines, and advising on trustworthy AI strategies

- Availability: Actively speaking with labs and ready for exploratory collaborations now

I’m available now, so reach out through “Contact me” whenever you’d like to talk.