Jacob Schmieder

Open to PhD Opportunities

I'm a physicist and Senior Data Scientist working with machine learning and AI tools in scientific applications. I'm currently seeking a PhD position where I can focus on physics-informed AI, developing reliable ML methods and scalable AI infrastructure for data-intensive physics.

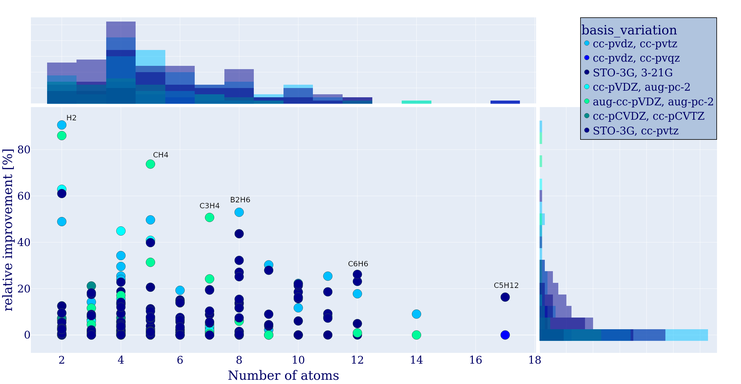

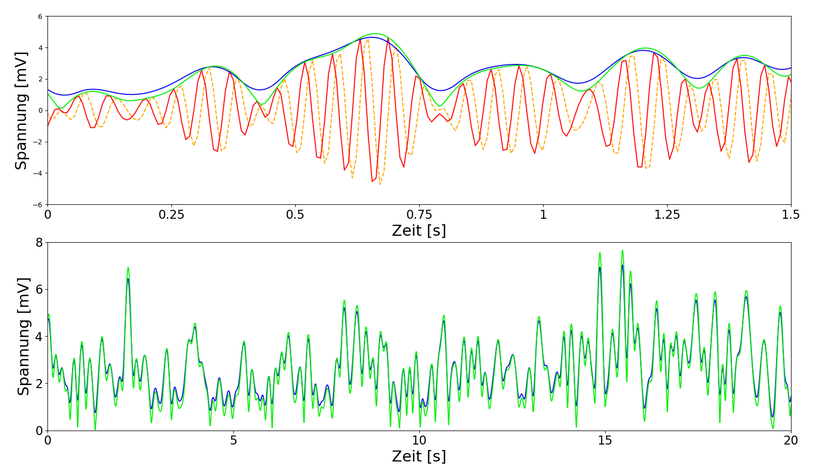

- Focus & research areas: Machine learning in scientific applications, physics-informed ML, probabilistic and uncertainty-aware AI, AI infrastructure, and scientific computing and simulation-driven studies

- What I bring: Experience transforming state-of-the-art AI models into robust open-source products and deploying these systems on HPC and cluster environments

- Availability: Currently seeking groups working in these areas and open to exploratory collaborations

If this aligns with your group, just tap Contact me and let's talk.